When was the last time you clicked through to a site? Research says it’s getting rarer and rarer (Pew). Today, many people treat AI overviews as the destination, not the doorway. Those responses shape not only which brands are viewed as authoritative, but also which ones win the business.

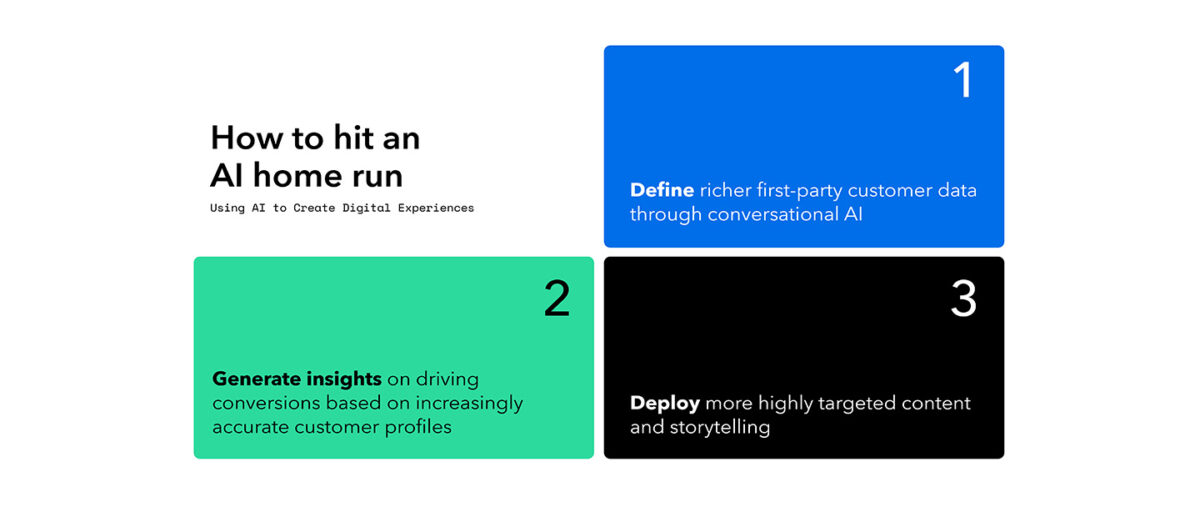

This shift isn’t just about the infrastructure of the attention economy. It’s about a whole new creation cycle for brands. Content now must move across new channels and contexts: human-to-human, human-to-machine, machine-to-machine, and machine-to-human. Commerce-driven companies have been adapting for years, but for knowledge-heavy organizations this is a step change, and marketing leaders need to update their strategy.

An AI Content Audit is the best place to start — showing how answer engines pick sources, how your content scores, and exactly where it needs fixing.

How Do AI Answer Engines Choose Sources?

Traditional content strategy has conditioned us to optimize for search queries, pageviews, and time-on-site. Those metrics still matter, but they’re no longer the whole story. Public AI systems don’t just surface pages. They synthesize, summarize, and attribute. If your best answers are buried, inconsistent, or written for people but not for answer engines, models will either misattribute your content, stitch together inaccurate claims, or cite a competitor.

At SJR, we see this play out again and again with new clients: authoritative facts are scattered across dozens (sometimes hundreds) of pages. Product pages lack transcripts or structured data, or a technical guide is comprehensive but impossible for a model to extract cleanly. The result is lower citation share in AI responses, errant answers, and lost conversions, all symptoms of a content estate that wasn’t built for the new AI-driven answer layer.
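
One quick way to spot the missing structured data described above is to check whether a page declares any schema.org types in JSON-LD. The sketch below is illustrative only and is not SJR’s audit tooling: the function name `schema_types` and the sample page are assumptions, and a real check would also need to cover microdata and RDFa.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collects parsed contents of <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # Malformed JSON-LD is treated the same as missing markup.


def schema_types(html: str) -> list:
    """Return the @type values declared in a page's JSON-LD blocks."""
    collector = JsonLdCollector()
    collector.feed(html)
    types = []
    for item in collector.items:
        entries = item if isinstance(item, list) else [item]
        for entry in entries:
            if isinstance(entry, dict) and "@type" in entry:
                declared = entry["@type"]
                types.extend(declared if isinstance(declared, list) else [declared])
    return types


# Hypothetical sample page, for illustration only.
page = """
<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Example Widget"}
</script>
</head><body><h1>Example Widget</h1></body></html>
"""

print(schema_types(page))                                   # ['Product']
print(schema_types("<html><body>No markup</body></html>"))  # []
```

An empty list for a priority page is a signal worth flagging, since it means the page gives answer engines no machine-readable description of what it covers.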

What is an AI Content Audit and How Does it Help?

An AI content audit is a focused, pragmatic test. We sample the pages that matter, we run them through both internal knowledge checks and public-model queries (ChatGPT, Google AI overviews, Perplexity), and we score each page on four practical signals: Correctness, Conciseness, Accessibility, and Simplicity. We then crosswalk those content scores with technical readiness — schema, sitemaps, media transcripts, RAG pipelines, DOM structure, fetch times — to build an implementable plan.

Put simply: The audit examines your content ecosystem as a whole to confirm whether the answers you want surfaced are actually present and consistent across your site. It then shows exactly where they fall short and which fixes to prioritize.
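
To make the scoring idea concrete, here is a minimal sketch of how per-page content scores might be combined with technical readiness to rank pages for fixes. Everything specific in it is an assumption made for illustration: the 0-10 scale for each signal, the unweighted average, the `priority` formula, and the example URLs. It is not the scoring model used in an actual audit.

```python
from dataclasses import dataclass
from statistics import mean

# The four content signals named in the audit description.
SIGNALS = ("correctness", "conciseness", "accessibility", "simplicity")


@dataclass
class PageAudit:
    url: str
    content_scores: dict        # signal name -> score, assumed 0-10 scale
    technical_readiness: float  # assumed 0-10 scale

    def content_score(self) -> float:
        """Unweighted mean of the four content signals (an assumption)."""
        return mean(self.content_scores[s] for s in SIGNALS)

    def priority(self) -> float:
        """Higher means fix sooner: combined gap to a perfect 10 on both axes."""
        return (10 - self.content_score()) + (10 - self.technical_readiness)


def rank_for_fixes(pages):
    """Order pages so the weakest ones come first."""
    return sorted(pages, key=lambda p: p.priority(), reverse=True)


# Hypothetical pages and scores, for illustration only.
pages = [
    PageAudit(
        "/products/overview",
        {"correctness": 9.0, "conciseness": 8.0, "accessibility": 7.0, "simplicity": 8.0},
        technical_readiness=8.0,
    ),
    PageAudit(
        "/about/innovation",
        {"correctness": 4.0, "conciseness": 6.0, "accessibility": 5.0, "simplicity": 5.0},
        technical_readiness=3.0,
    ),
]

for page in rank_for_fixes(pages):
    print(page.url, page.priority())
# /about/innovation 12.0
# /products/overview 4.0
```

Keeping the content and technical scores separate until the final ranking makes it easier to see whether a page needs an editorial fix, an engineering fix, or both.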

How Do We Run AI Content Audits?

To keep audits simple and fast, every SJR Content Audit follows four repeatable steps designed for rapid discovery and impact.

- Align: We clarify goals, audience, KPIs, and scope, then sample the priority pages you care about.

- Audit: We run automated and manual tests across content, technical signals, RAG, and public models.

- Prioritize: We deliver a scored diagnosis that surfaces high-impact quick wins and mid-term fixes.

- Implement: We hand over implementation-ready tasks, a measurement plan, and optional support to execute.

A Real Example of an AI Content Audit

In a recent audit for a global automotive newsroom, we evaluated more than 500 core questions and scraped roughly 10,400 public pages to build a custom knowledge base. The results were illuminating: More than two-thirds of sampled answers matched the client’s intended answers, 16% were incorrect, and 16% returned no answer at all, with the biggest gaps in corporate and innovation content. Technical readiness scored low (AISO readiness 5 out of 10), driven by poor schema coverage and slow fetch times.

The remedy wasn’t rewriting everything. It was surgical: answer-first summaries on key pages, smart content structures for the most-cited questions, and a measurement plan to prove lift via randomized page-level holdouts. Focused changes like these can create outsized impact: better citations, cleaner answers, and measurable improvement in referral quality.
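
A randomized page-level holdout means some pages are deliberately left unchanged so that any lift can be attributed to the fixes rather than to outside trends. The sketch below shows one simple way to make that split. The function name `assign_holdout`, the 20% holdout fraction, the seed, and the example URLs are all assumptions for illustration, not details from the audit described above.

```python
import random


def assign_holdout(page_urls, holdout_fraction=0.2, seed=42):
    """Randomly split pages into a treated group and an untouched holdout group."""
    if not 0 < holdout_fraction < 1:
        raise ValueError("holdout_fraction must be between 0 and 1")
    shuffled = sorted(page_urls)           # sort first so input order does not matter
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_holdout = max(1, round(len(shuffled) * holdout_fraction))
    return {"holdout": shuffled[:n_holdout], "treated": shuffled[n_holdout:]}


# Hypothetical page list, for illustration only.
urls = [f"/newsroom/article-{i}" for i in range(10)]
groups = assign_holdout(urls)

print(len(groups["holdout"]), len(groups["treated"]))  # 2 8
```

Because the assignment is random, later differences in citation share or referral quality between the two groups are less likely to reflect how the pages were picked.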

Why Should I Care About AI Content Audits?

Notice how this piece is written: It’s built around short questions, direct answers, and clear headers. That’s intentional. It isn’t just content about AI readiness — it’s content built to be AI-friendly. Writing in question-and-answer form with clean signals is exactly how you make content easier for models to surface and cite.

Generative AI hasn’t made content less important — it’s simply made the rules of discoverability stricter. Being the brand that’s cited by answer engines is a strategic advantage: it protects trust, increases referral quality, and improves conversion. An AI Content Audit turns that advantage from theory into an actionable plan.

If you want to see the audit methodology in action and view a sample report, visit our AI Content Audit page for more information and a case study.