Frequently Asked Questions

Generative Engine Optimization (also known as GEO) is the practice of designing and structuring content so it’s reliably discovered, trusted, and surfaced by generative AI systems (LLMs and multimodal answer engines), rather than only by traditional index-and-click search engines.

GEO has been evolving at a rapid pace, building on the same principles that made SEO useful (clear signals, authoritative sources, and good information architecture) while adapting them to a world where answers are often generated, summarized, or surfaced conversationally through AI platforms. For marketing teams, GEO should be treated as an important new capability in the content toolkit, not a replacement for SEO, and certainly not a replacement for original thinking and higher-level marketing strategy.

At SJR, we’ve supported clients across the full spectrum of adaptations to AI, from pragmatic tweaks that preserve existing Search gains, to careful experiments with AI model-aware formats and provenance signals.

If you’re wondering how to put all that into action, start by consulting the FAQ below.

Category 1

Foundational Concepts

Focus: Introduce basic terms and principles for users new to GEO.

What is Generative Engine Optimization (GEO) and how is it different from traditional SEO?

- GEO is the practice of designing and structuring content so it’s reliably discovered, trusted, and surfaced by generative AI systems (LLMs and multimodal answer engines), rather than only by traditional index-and-click search engines.

- Where SEO optimizes for crawler indexation and ranking signals, GEO optimizes for model-aware discovery: clear provenance, precise context, structured inputs, and answer quality. The goal is for a model to (a) pick your content as a source, (b) summarize or quote it accurately, and (c) add a link or call to action that routes users back to your site.

- Why does it matter for brands? Generative systems increasingly synthesize answers from many sources. If you want those systems to surface your content — and to drive qualified traffic, leads, or brand signals from those surfaces — you must design for how models read, trust, and cite material, not just for how people click.

How has search evolved, and what does it mean for my content strategy?

- Historically, search prioritized keyword matching, backlinks, and ranking algorithms (e.g., PageRank) that routed users to pages.

- Search performance was measured by SERP rank and click-through rates; content scale relied on keywords, on-page SEO, and link-building.

- This era favored pages optimized for discovery rather than tight, cited answers.

- Search is shifting to conversational, multimodal, and zero-click experiences: concise answers, AI Overviews, and direct actions (book, map, call).

- AI engines now value citation-ready content, structured data, and multimedia that work in summaries and voice/chat surfaces.

- Brands must optimize for being the source behind answers, not just a ranked result.

Where do large language models (LLMs) learn and retrieve content?

LLMs pull from three types of signals:

- Foundational sources (to build long-term trust): high-authority news, government sites, academic research, Wikipedia, digitized books, open repositories (e.g., Common Crawl), GitHub/technical docs, industry whitepapers, and your owned pages (blogs, press, product docs).

- Influence channels (to spread expertise and authority): news mentions, media coverage, thought leadership on X/LinkedIn, Substack/Medium, vertical niche sites, and community Q&A (Reddit, Stack Overflow, review sites).

- Real-time signals (to keep content current): frequently updated pages, engagement/interaction data, trending social posts, and multimedia (video, podcasts, transcripts) that show freshness and relevance.

How can AI search optimization improve search results for my brand?

- Be cited, not buried: Structured pages (schema, canonical hubs, clear facts) raise the odds your content is named in AI summaries and conversational answers.

- Cleaner answers, fewer errors: Retrieval-anchored content (sources, quotes, links) gives models verified material, reducing noise and misunderstandings.

- Multimodal discoverability: Transcripts, alt text, and concise summaries help your videos, podcasts, and images appear in AI-led results.

- Measurable impact: Track citation rate, zero-click actions (calls, bookings, map opens), and downstream conversions—not just classic CTR.

- Caveat: GEO works when signals are strong. You still need fresh, high-quality sources and a human-in-the-loop governance process to limit hallucinations and bias.

Category 2

Technical Foundations

Focus: Provide actionable steps for implementing GEO on websites.

What are the best practices for creating content that AI models will cite accurately?

- You can’t fully prevent model hallucinations — that behavior is intrinsic to the provider’s LLM — but you can dramatically reduce risk and manage impact.

- Anchor model outputs to verified sources via a robust RAG pipeline, enforce inline citations and provenance metadata, and gate high-risk content with human review.

- Add automated validators (source-matching checks, citation confidence thresholds, and simple fact-check rules) to catch likely errors before publication, keep authoritative pages fresh, and log every prompt and revision for audit and rollback.

- Measure hallucination/error rate and user escalation metrics so you can iterate.
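
The automated validators described above can be sketched in a few lines of Python. This is a minimal illustration, not a production fact-checker: the `Claim` fields, the `0.8` confidence threshold, and the sample pricing text are all assumptions made for the example, and the source-matching check is a simple normalized substring test.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    text: str          # sentence produced by the model
    quote: str         # passage the model says supports the sentence
    source_id: str     # key of the cited source document
    confidence: float  # citation confidence in [0, 1], however your pipeline scores it


def normalize(s: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't fail the match."""
    return " ".join(s.lower().split())


def validate_claims(claims, sources, min_confidence=0.8):
    """Return (claim, reason) pairs that should be routed to human review."""
    flagged = []
    for claim in claims:
        source_text = sources.get(claim.source_id)
        if source_text is None:
            flagged.append((claim, "unknown source"))
        elif normalize(claim.quote) not in normalize(source_text):
            flagged.append((claim, "quote not found in source"))
        elif claim.confidence < min_confidence:
            flagged.append((claim, "low citation confidence"))
    return flagged


# Hypothetical source text and model claims, for illustration only.
sources = {"pricing-page": "The Pro plan costs $20 per user per month, billed annually."}
claims = [
    Claim("Pro is $20 per user.", "the pro plan costs $20 per user per month", "pricing-page", 0.93),
    Claim("Pro includes SSO.", "Pro includes single sign-on", "pricing-page", 0.90),
    Claim("Pro is billed annually.", "billed annually", "pricing-page", 0.55),
]
for claim, reason in validate_claims(claims, sources):
    print(f"{reason}: {claim.text}")
```

Dividing the number of flagged claims by the total gives a rough error rate you can track over time, alongside user escalations.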

What technical updates should I make to my website to ensure AI systems can find and trust my content?

- Optimize machine-readable signals and site health so model-driven interfaces can find, trust, and surface your content.

- Implement schema markup, clear content types, sitemaps, and canonical tags so generative systems can access and parse your pages for AI answer engines.

- Implement site health and discoverability checks.

- Models still rely on indexed, accessible pages. Ensure crawling is allowed (robots.txt) and your CDN/hosting config does not block bots.

- Make important content easily findable via internal links and clear category pages — RAG systems surface pages that are discoverable in your site graph.

- Make key content available in textual form (transcripts or text versions of interactive media) so models can parse and cite it.

- Support text with high-quality images and videos (with captions/transcripts) so multimodal engines can use non-text assets.

- Verify that structured data matches visible page text. Schema should accurately reflect the content models will read.

- All of this will help with ARO (Answer Retrieval Optimization).
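
One quick way to confirm that crawling is allowed is to test your robots.txt against the crawlers you care about. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content and the example URL are made up, and the user-agent tokens are examples only, so check each vendor's documentation for the names its crawlers currently use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks one AI crawler and hides /admin/ from everyone.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""


def crawl_access(robots_txt, user_agents, url):
    """Return {user_agent: allowed?} for one URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in user_agents}


report = crawl_access(
    ROBOTS_TXT,
    ["GPTBot", "PerplexityBot", "Googlebot"],
    "https://example.com/faq/geo",
)
for agent, allowed in report.items():
    print(f"{agent}: {'allowed' if allowed else 'BLOCKED'}")
```

This only covers robots.txt rules. CDN or firewall bot blocking happens at a different layer and needs to be checked in your hosting configuration or server logs.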

What is ARO and how does it relate to GEO and AI Search Optimization (AISO)?

- ARO (Answer Retrieval Optimization) is the technical layer that increases your odds of being discovered, selected, and cited by AI systems. Think of it as the retrieval foundation beneath your GEO/AISO strategy.

- Purpose: Make your content machine-readable and retrieval-ready so answer engines can parse, rank, and cite it.

- Core tactics: schema/JSON-LD, canonical hubs, clean internal links, XML sitemaps & robots hygiene, stable URLs + section anchors, semantic headings, fact tables & source lists, author/date/provenance metadata, frequent lastmod, multimedia transcripts/alt text, media sitemaps, and RAG-readiness (chunkable sections, downloadable assets, public docs/APIs).

- How it fits: GEO = end-to-end program (strategy, governance, creative, measurement). AISO = channel strategy to win on AI search surfaces. ARO = technical foundation powering both.
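
To make the schema/JSON-LD tactic concrete, here is a minimal sketch that builds schema.org `FAQPage` markup from question-and-answer pairs and checks that every marked-up answer also appears in the visible page text. The helper names and sample copy are assumptions for illustration; adapt the check to however your CMS exposes rendered text.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def missing_from_page(pairs, visible_text):
    """Return answers present in the markup but not verbatim in the visible text."""
    page = " ".join(visible_text.split())
    return [a for _, a in pairs if " ".join(a.split()) not in page]


pairs = [
    ("What is GEO?", "GEO is the practice of structuring content for generative AI systems."),
    ("Does GEO replace SEO?", "No. GEO complements SEO."),
]
visible_text = """
What is GEO?
GEO is the practice of structuring content for generative AI systems.
"""

markup = faq_jsonld(pairs)
print('<script type="application/ld+json">')
print(json.dumps(markup, indent=2))
print("</script>")
print("Answers missing from the page:", missing_from_page(pairs, visible_text))
```

Running the mismatch check in your publishing workflow helps keep structured data and on-page copy from drifting apart.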

How do I ready my website for an evolving future of search?

- Readiness takes several changes at once. Implement schema markup, canonical tags, sitemaps, and descriptive metadata so models can parse and cite your pages.

- Publish authoritative, evergreen pages with clear intent signals and inline citations or source lists.

- Add transcripts and alt text for multimedia, enable API/endpoint access for RAG, and keep content fresh with versioning and audit logs.
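
As one small piece of this, a sitemap with accurate `lastmod` dates gives crawlers a freshness signal. The sketch below generates one with Python's standard library; the URLs and dates are placeholders, and in practice the dates would come from your CMS or version history.

```python
from datetime import date
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    ET.register_namespace("", SITEMAP_NS)  # emit the sitemap namespace as the default
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, modified in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Placeholder pages for illustration.
sitemap_xml = build_sitemap([
    ("https://example.com/faq/geo", date(2025, 1, 15)),
    ("https://example.com/docs/product", date(2025, 2, 3)),
])
print(sitemap_xml)
```

Only update `lastmod` when the page content meaningfully changes, so the date stays a trustworthy signal.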

How can brands make sure they’re the source models cite?

- While there are no guarantees, these are the strategies that work best:

- Publish canonical knowledge hubs (FAQs, explainers, product docs) with clear intent signals so RAG systems can index them.

- Add structured data and provenance metadata (schema, author, publish date, source lists) to make pages machine-readable and citation-friendly.

- Offer multimodal assets and transcripts (video, audio, images with alt text) to capture Search Live and multimodal retrieval.

- Keep high-value pages fresh and link them from authoritative sections (press, research, or resource centers).

- Encourage trusted citations (PR placements, academic partnerships, industry mentions) and make content easy to reference (stable URLs, clear quotes, downloadable assets).

- Implement an AI content audit.

Category 3

Content Strategy

Focus: Guide users on creating and optimizing content for GEO.

How should we write for consistent, on-brand generative outputs?

- Use templates with: context, target persona, tone, required citations, prohibited claims, and preferred formats (Q/A, short summary, steps).

- Include canonical sources and schema snippets to increase the chance AI Overviews will cite your content.

- Version prompts and track which briefs produce cited, low-revision outputs.
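
A brief template like the one described above can be kept as a small, versioned data structure so every prompt is reproducible. This is a sketch under stated assumptions: the `ContentBrief` class, its field names, and the sample values are illustrative, not a standard format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContentBrief:
    """One versioned brief; every field name here is illustrative."""
    version: str
    context: str
    persona: str
    tone: str
    required_citations: tuple
    prohibited_claims: tuple
    output_format: str

    def render(self) -> str:
        """Turn the brief into the instruction text sent to the model."""
        return "\n".join([
            f"[brief {self.version}]",
            f"Context: {self.context}",
            f"Audience: {self.persona}",
            f"Tone: {self.tone}",
            "Cite these sources: " + "; ".join(self.required_citations),
            "Never claim: " + "; ".join(self.prohibited_claims),
            f"Format: {self.output_format}",
        ])


brief = ContentBrief(
    version="1.2.0",
    context="FAQ answer about GEO for the resource center",
    persona="Marketing lead new to AI search",
    tone="Plain, confident, no hype",
    required_citations=("https://example.com/research/geo-explainer",),
    prohibited_claims=("guaranteed citations", "guaranteed rankings"),
    output_format="Q/A, answer under 80 words",
)
prompt = brief.render()
print(prompt)
```

Logging the brief version next to each published output makes it possible to see which versions produce cited, low-revision content.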

When should we use human writers + AI vs. fully automated generation?

- The most successful brands use a hybrid (human + AI) approach.

- We’ve found that original, breakthrough content, typically generated by humans, still wins.

- Google’s Head of Search backs the idea that authentic human POVs in multimodal formats attract deeper engagement and higher-quality traffic.

- “People are increasingly seeking out and clicking on sites with forums, videos, podcasts, and posts where they can hear authentic voices and first-hand perspectives. People are also more likely to click into web content that helps them learn more — such as an in-depth review, an original post, a unique perspective or a thoughtful first-person analysis.”

- In short, you still need humans for large parts of your content: brand messaging, expert analysis, regulated copy, and any high-stakes material.

- However, you may find automated generation to be worthwhile for low-risk, high-volume tasks like product descriptions, short metadata, and simple summaries.

- But ensure you set quality thresholds (e.g., citation likelihood, confidence/accuracy scores) that trigger human review.

- And continuously measure the cost vs. quality tradeoffs by content type so you can tighten or loosen human intervention over time.
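
The threshold-based routing described above might look like the following. The content-type names, score names, and threshold values are assumptions chosen for the example; set them from your own quality data and adjust them as the cost and quality tradeoffs become clearer.

```python
# Content types that always go to a human, regardless of scores.
ALWAYS_REVIEW = {"brand_messaging", "expert_analysis", "regulated_copy"}

# Minimum scores for automated publishing, per low-risk content type (illustrative values).
THRESHOLDS = {
    "product_description": {"citation": 0.6, "accuracy": 0.90},
    "metadata": {"citation": 0.0, "accuracy": 0.85},
    "summary": {"citation": 0.5, "accuracy": 0.90},
}


def route(content_type, citation_likelihood, accuracy):
    """Return 'human_review' or 'auto_publish' for one generated draft."""
    if content_type in ALWAYS_REVIEW:
        return "human_review"
    limits = THRESHOLDS.get(content_type)
    if limits is None:
        return "human_review"  # unknown content types take the safe path
    if citation_likelihood < limits["citation"] or accuracy < limits["accuracy"]:
        return "human_review"
    return "auto_publish"


print(route("product_description", 0.72, 0.95))
print(route("product_description", 0.72, 0.80))
print(route("regulated_copy", 0.99, 0.99))
```

Tightening or loosening human intervention then becomes a matter of editing the threshold table rather than the workflow.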

Category 4

Brand and Business Readiness

Focus: Help brands align their governance, attribution, and business goals with GEO.

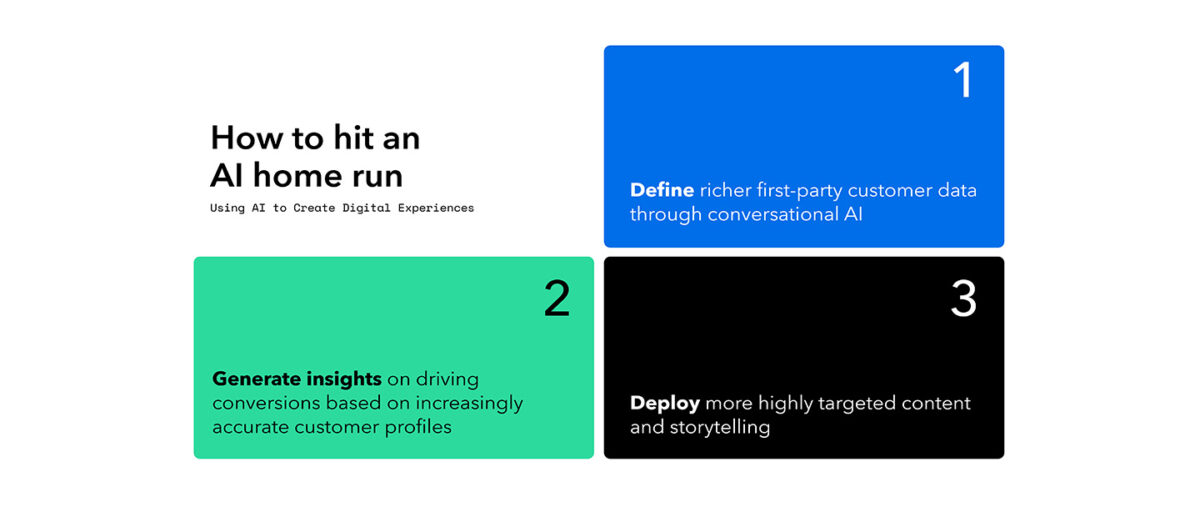

How do I prepare my brand and business for AI search optimization?

- Several things need to happen in parallel. First, put strong brand governance in place: editorial standards, prompt/playbook libraries, attribution rules, and human-in-the-loop checkpoints.

- You also need to create canonical knowledge hubs (FAQ, product pages, help center) designed for citation and reuse by models.

- Additionally, train your teams to apply the same foundational SEO best practices to AI features as they do to Google Search overall: meet the technical requirements for Google Search, follow Search policies, and focus on key best practices such as creating helpful, reliable, people-first content.

Should we focus our entire content strategy on GEO?

- No. GEO helps content get discovered in model-driven interfaces, but it should be one channel in a balanced content mix.

- Prioritize GEO for discoverability and answer quality, and pair it with original, human-authored thinking (in-depth posts, reviews, videos, podcasts) that build distinct brand authority and drive deeper engagement.

- See: Google’s recent note on how authentic voices and first-person perspectives continue to attract clicks.

What testing and rollout process should teams follow for GEO initiatives?

- You should start with small, measurable bets: state a clear hypothesis, run a focused experiment with a control and a variant (A/B), and track meaningful KPIs.

- Let the test run long enough to gather reliable signals before you decide.

- Roll out cautiously: Deploy winners incrementally (subset of pages or traffic), and put rollback guardrails in place — e.g., automatic revert for sharp accuracy drops, spikes in support incidents, or brand-safety flags.

- Document every prompt, tweak, and result in a governed prompt library so your team can reproduce, audit, and learn.

- When you have repeatable wins, scale thoughtfully: extend experiments into multimodal assets (video transcripts, images) and local/commerce scenarios (listings, reviews) as AI Modes and live features evolve.

- Always measure downstream impact on engagement and conversions, not just surface-level adoption.
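
For the A/B step, one common way to judge whether a variant's citation rate really differs from the control's is a two-proportion z-test. The sketch below implements it with the standard library and adds a simple rollback guardrail. The sample counts and the 0.05 maximum accuracy drop are assumptions, and the test presumes reasonably large, independent samples.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) comparing rate B against rate A."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def should_roll_back(baseline_accuracy, current_accuracy, max_drop=0.05):
    """Guardrail: revert when accuracy falls more than max_drop below baseline."""
    return (baseline_accuracy - current_accuracy) > max_drop


# Hypothetical experiment: tracked queries in which the page was cited.
z, p_value = two_proportion_z_test(successes_a=40, n_a=500, successes_b=65, n_b=500)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("roll back?", should_roll_back(baseline_accuracy=0.95, current_accuracy=0.88))
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about business impact, which is why the downstream engagement and conversion checks still matter.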

Category 5

Advanced Insights and Metrics

Focus: Address complex questions around measurement, testing, and advanced use cases.

What quality and performance metrics should we track for GEO?

Right now, visibility into AI answer-engine metrics is limited (platforms often don’t tag AI-surface clicks), but we’re watching closely. Here’s what we’ve learned so far:

- Sites shown in Google’s AI features (like AI Overviews and AI Mode) are included in Google Search Console’s Performance report under the “Web” search type.

- Use that report to see overall traffic changes, then combine it with Google Analytics (or your analytics tool of choice) to track conversions and time on site.

- We’ve also observed that clicks from pages with AI Overviews tend to be higher quality — users often spend more time on the site after those clicks.

- For all AI answer engines, it helps to monitor “zero-click” impact and secondary actions (map clicks, calls, bookings) that indicate value even without a page click.

- You can also supplement metrics with operational proxies: Use query-level inference and third-party trackers, UTM/landing-page tagging and referrer heuristics, branded-search lift, and citation/backlink monitoring to capture visibility and impact even when platforms don’t label AI-surface clicks.
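
The referrer and UTM heuristics mentioned above can start as a simple lookup. In this sketch the host list is an assumption, not an official or complete registry: platforms change domains and do not always send a referrer, so treat the result as a lower bound on AI-driven visits.

```python
from urllib.parse import urlparse, parse_qs

# Assumed referrer hosts for a few AI platforms; verify against your own logs.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_visit(referrer, landing_url):
    """Return the AI platform label for a visit, or None if no heuristic matches."""
    query = parse_qs(urlparse(landing_url).query)
    utm_source = query.get("utm_source", [""])[0].lower()
    host = (urlparse(referrer).hostname or "").lower()
    for domain, label in AI_REFERRER_HOSTS.items():
        if host == domain or host.endswith("." + domain):
            return label
        if utm_source == domain:
            return label
    return None


visits = [
    ("https://www.perplexity.ai/search?q=geo", "https://example.com/faq/geo"),
    ("", "https://example.com/faq/geo?utm_source=chatgpt.com"),
    ("https://www.google.com/", "https://example.com/faq/geo"),
]
for referrer, landing in visits:
    print(classify_visit(referrer, landing))
```

Feeding these labels into your analytics tool as a custom dimension lets you compare conversions and time on site for AI-referred sessions against other traffic.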

How do we prevent hallucinations, factual errors, and plagiarism in model outputs?

- You can’t fully prevent model hallucinations — that behavior is intrinsic to the provider’s LLM — but you can dramatically reduce risk and manage impact.

- Anchor model outputs to verified sources via a robust RAG pipeline, enforce inline citations and provenance metadata, and gate high-risk content with human review.

- Add automated validators (source-matching checks, citation confidence thresholds, and simple fact-check rules) to catch likely errors before publication, keep authoritative pages fresh, and log every prompt and revision for audit and rollback.

- Measure hallucination/error rate and user escalation metrics so you can iterate.

Category 6

Trends and Future Outlook

Focus: Discuss broader trends and implications of AI-driven search.

Will advertising still work in AI search, and how should brands think about ads on AI platforms?

- Advertising remains a potential channel on AI platforms, but it’s evolving to include more native, integrated formats rather than only traditional search ads — so brands should prepare for citations and actions, not just clicks.

- Here’s the current landscape:

- Some platforms (e.g., Perplexity) are already testing native placements like sidebar videos and “sponsored follow-ups”; others (Copilot, Gemini, ChatGPT) are either routing ads through existing ad networks or still considering in-tool ad formats.

- Some players (e.g., Grok/X) enable sponsored content creation without in-tool placement.

- What does this mean for brands?

- Expect fewer direct SERP clicks and more emphasis on being the cited source or the provider of a seamless follow-up action (book, call, add to cart). Ads will need to fit the conversational UX and respect provenance/disclosure norms.

- There’s a measurement shift:

- Success will be measured by citations, downstream conversions and secondary actions (calls, bookings, map opens), not just CTRs — track zero-click value and end-to-end attribution.

- Here are some immediate tactics to get started:

- Pilot native tests

- Make landing assets citation-ready (canonical pages, schema, short authoritative snippets)

- Align creative to multimodal formats (video + transcripts)

- Set governance for disclosure and compliance.

- The long game is to invest in authoritative content and PR so platforms have high-trust sources to cite. Keep monitoring platform ad policies, and be ready to adapt placements as AI answer engines and monetization evolve.

Are younger audiences really switching to AI-first search, and what does that mean for brands?

- Yes — younger users are adopting AI-first search habits far faster, which means brands need to compete to be the answer, not just rank for it.

- What’s happening: younger cohorts show a much higher preference for AI Overviews and conversational answers over traditional organic links, signaling a shift toward speed, convenience, and direct responses.

- Why it matters: as AI-first behavior scales, traffic won’t just move — attention will consolidate into zero-click answers and AI summaries that cite a handful of sources. Brands that aren’t citation-ready will lose visibility even if they keep ranking in classic SERPs.

- Immediate actions:

- Prioritize concise, canonical pages designed for quick citation (short summaries, clear facts, schema, and stable URLs)

- Create mobile-first, multimodal assets (video + transcripts)

- Run experiments measuring citation rate and downstream conversions, not just SERP rank.

- Long game: invest in authoritative content hubs and PR/partnerships that generate high-trust signals so AI systems choose your brand as the source of truth.

What should I read to get up to speed on GEO, AI search, and advertising in LLMs?

- AI Search – essential reads

- Transactional AI Traffic: A Study Of Over 7 Million Sessions (Search Engine Journal): Empirical data on where AI-driven queries are converting and which sessions remain transactional.

- 100+ Incredible ChatGPT Statistics & Facts in 2025 (Notta): Quick reference for adoption, usage, and demographic signals you’ll want to map to audience strategy.

- Google AIO Impact — SEO & PPC CTRs at All-Time Low (Seer Interactive): Practical evidence of “zero-click” effects and what that means for classic SEO/PPC measurement.

- The First-Ever UX Study of Google’s AI Overviews (Search Engine Journal): UX insights showing how people interact with AI Overviews and which content formats get cited.

- From Queries to Conversations: How AI Chatbots Are Redefining Search (VML)

- Advertising in LLMs:

- Perplexity CEO Says Its Browser Will Track Everything to Sell ‘hyper-personalized’ ads (TechCrunch): Signals about privacy, tracking, and potential for highly personalized ad experiences.

- Google Sees Ad Potential in Gemini AI (Search Engine Land): How major platform owners are thinking about ad placements within AI results.

- Inside the Deck Perplexity Is Using to Pitch Advertisers (AdWeek): Practical view of early native formats (sponsored follow-ups, cards) and value props advertisers will see.

- A Peek Behind Perplexity’s Nascent Ads Business (AdExchanger): Operational read on how platforms are experimenting with monetization models.

- ChatGPT with ads: ‘Free-user monetization’ coming in 2026? (Search Engine Land): Timeline and implications for brands if conversational platforms add ad tiers.